
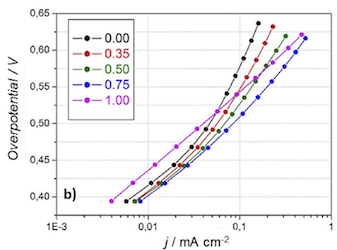

Water oxidation (WO) is an ideal target reaction for designing earth-abundant electrocatalysts because it is the energy-intensive half-reaction of water splitting. In nature, Photosystem II catalyzes this reaction at a Mn-containing cluster, so Mn-based materials have been explored extensively as WO catalysts. We present a fast and tunable electrodeposition synthesis of an active MnOx catalyst from a Mn(VII) precursor, unlike most electrodeposited MnOx WO catalysts, which are prepared from Mn(II). The main focus of this work is the addition of Fe to the synthesis, which gives a clear decrease in Tafel slope relative to MnOx alone. Electrochemical impedance spectroscopy (EIS) shows that the Fe-containing catalysts have improved charge transfer and charge transport. In extended chronopotentiometry (70 min), adding Fe increases the stability of the film. The most consistent Tafel slope and the highest stability are obtained with a 1.00 mM concentration of the Fe species in the electrodeposition bath. Extended characterization (Raman microscopy, FESEM, UPS, and XPS) suggests that Fe does not significantly change the original MnOx structure; instead, Fe³⁺ ions are incorporated into a birnessite-like MnO2 film.
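The Tafel slope used as the main figure of merit here is the slope b in the Tafel relation η = a + b·log10(j), where η is the overpotential and j the current density; a smaller b means less extra overpotential is needed per tenfold increase in current. The following is a minimal sketch of extracting b by a least-squares fit, assuming steady-state, iR-corrected polarization data already restricted to the linear Tafel region. The function name `tafel_slope` and the synthetic points are hypothetical illustrations, not the authors' analysis procedure or data.

```python
import math


def tafel_slope(current_density, overpotential):
    """Least-squares slope of overpotential (V) versus log10(current density).

    Hypothetical helper: returns the Tafel slope in mV per decade.
    Assumes all current densities are positive and lie in the linear Tafel region.
    """
    if len(current_density) != len(overpotential) or len(current_density) < 2:
        raise ValueError("need at least two paired (j, eta) points")
    x = [math.log10(j) for j in current_density]  # decades of current density
    y = list(overpotential)                       # overpotential in volts
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("current densities must not all be equal")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return 1000.0 * sxy / sxx                     # V/decade -> mV/decade


# Synthetic points generated from eta = 0.30 + 0.060*log10(j); NOT data from this work.
j = [0.1, 1.0, 10.0]  # mA/cm^2
eta = [0.30 + 0.060 * math.log10(v) for v in j]
print(round(tafel_slope(j, eta), 1))  # 60.0
```

Because the synthetic points lie exactly on a line with b = 0.060 V per decade, the fit recovers 60 mV/dec; with real polarization curves, the choice of fitting window strongly affects the extracted slope.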
